Thank you for stopping by to read The Common Reality. Every Sunday, I drop a new article on Engineering Management, AI in the workplace, or navigating the corporate world. Engagement with these articles helps me out quite a bit, so if you enjoy what I wrote, please consider liking, commenting, or sharing.

Last week, I shared a sample chapter from a field manual I’m writing, which outlined what effective communication during an incident looks like. In today’s post, I am going to share a story that I’ve reflected on several times because it was not my finest day.

Having learned from this story, I wrote the following section of the chapter:

The tone of the updates matters. A chaotic-sounding update creates more stress. A dry, vague one can come across as dismissive. The Incident Manager’s goal is calm, factual, and confident, even if everything behind the scenes is still uncertain. The Incident Manager needs to control the narrative.

Avoid writing an update that sounds as if its author has already decided they are going to be fired for this incident. Incidents can be very stressful events, but a single incident very rarely results in termination. Even the Apple executive directly responsible for the disastrous initial rollout of Apple Maps wasn’t going to be fired for that incident until he refused to apologize for the rollout.

Firing off ad hoc email updates with incomplete information and emotional undertones will be career-limiting for anyone who goes down that path. An Incident Manager who demonstrates ownership of the situation, details the steps being taken toward resolution, acknowledges the impact on other teams and customers, and updates stakeholders regularly will absolutely be viewed as someone worth retaining.

With that backdrop, let’s dive in. Names, roles, and a few details have been updated to protect the identities of others in the story.

Losing my cool

It was a Friday morning, because stuff like this only happens on Friday mornings. I had been at my desk for only a few minutes when I started getting message notifications from Carl, a Director in a different business line.

Carl: Can you join this incident call? We have a SEV1.

Me: *groaning because my week was already off target and today I was going to catch up* Sure, send over the details.

I joined the incident call and found it to be very…empty. A SEV1-level incident is an “all hands on deck” situation, and this call was not that. I announced myself and asked for the ticket so I could get up to speed. What I got instead was the first red flag.

Carl: Hmm, I don’t know if we have a ticket. I can just give you an overview of the issue. A build server that we use for our API Platform is offline and we haven’t been able to push changes to production. Do you have an engineer that could troubleshoot or could we connect to your build server? The vendor gave us the installation guide.

Me: OK, my team supports a similar build server, and I’m happy to have an engineer take a look, but do you have any idea what might have taken your build server offline?

Carl: We aren’t sure and the vendor isn’t helping us out.

Me: Alright, let me bring a few members of the team in.

At that point, I was very skeptical about the situation. Referencing a SEV1 without having a ticket was odd. The vendor not getting involved was also odd. It all sounded like something that was being intentionally kept under the radar. Hoping for a quick resolution so I could get on with my day, I brought over two engineers who could start asking questions about the build server setup.

Around an hour and many questions later, Carl was getting frustrated. I didn’t appreciate the tone he started to take with my team because a) this was his incident, and b) his engineers didn’t seem to know the basics of this build server’s setup. With tensions rising, Carl jumped straight to what he wanted:

Carl: We need to connect to your build server. Your team needs to get the vendor’s packages installed on it.

Me: I’m not in agreement, and I don’t want to rush into installation because I am not clear on what the issue is here. I cannot put my build server into the same state.

Carl: Well, this is a SEV1 incident and a priority platform, so we need to do it.

Me: I think we need Melinda (my Manager) and Gary (Carl’s Manager) to weigh in.

I sent a message to Melinda to jump on a new call with Carl and Gary. I knew what the outcome would be, though: Melinda would opt to support Carl’s issue. She was a company-first decision maker. Maybe, just this once, Melinda would push back, and I could disconnect from this incident.

Fast-forwarding through a predictable call: Melinda stayed true to form, and my team was now putting all work on pause to get these packages installed and configured. As this was a SEV1 incident, we were working at a frantic pace. My team was great, and they demonstrated fantastic flexibility. We got the packages installed after several hours and ran some regression testing to ensure we didn’t take down all our services.

Proudly, I messaged Carl that the installation was available and that we could point the API Platform at our build server.

Carl: Oh…thanks, but we don’t need that any more. We think we can get the changes into production without the build server.

Me: Can you join my call so I can get updated on this incident? I don’t think I am following what changed.

Carl: I will join when I can, using your build server was just a backup idea we had.

My blood pressure likely broke world records upon reading that last message from Carl. My whole day was wasted. My team’s day was also wasted. I was already stressed about the week not going the way I wanted, and this disaster was dropped right on top of it.

I instructed my team to drop from the call. I thanked them endlessly for their effort and apologized that it seemed like it was in vain. And then I waited. And I waited. Around 45 minutes passed without Carl joining the call, so I messaged Carl, Gary, and Melinda asking them to join so we could discuss the incident, as I was not happy1 about the situation.

Melinda and Gary joined shortly after my message. Gary then messaged Carl, who finally joined.

I went off, hard.

I demanded that someone2 senior be held accountable for the way this incident played out. I demanded complete transparency into the root cause analysis being performed so I could understand the true extent of Carl’s team’s technical ineptitude. I asked Carl how he would like to handle our partnership going forward, as I no longer trusted anything he had to say.

Melinda attempted to pull me back, but I was furious. Stress from the whole role was coming out: a “straw that broke the camel’s back” situation. I finished and was met with only silence on the call. After a few moments, Melinda (correctly) ended the call.

I got a direct call from Melinda shortly after.

Melinda: Drew, you absolutely cannot lose yourself like that. While you are right, things like this can spiral out of control.

Drew: I don’t think being “nice” works in this situation. If I just sent an email saying I was unhappy, Gary would have just moved on.

Melinda: It doesn’t matter. You need to control your emotions in the future.

Drew: Fine, I am sorry.

I wanted to debate Melinda here, but I was emotionally exhausted. It was far easier to accept the feedback.

Reflecting

It’s been many years since this situation. Thinking about it right now, as I write this article, still enrages me. I am now much more in control when faced with similar nonsense, but that’s a skill I have to practice frequently. People who are overly emotional will not last long in leadership roles. They burn too hot.

Being in control emotionally may mean losing a battle to win a war. That’s the right long-term strategy.

How would you have handled that situation?

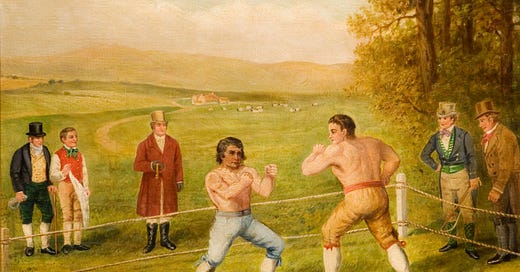

1. “Not happy” was the understatement of the century. I was ready for a physical altercation.

2. Carl is who I had in mind.